Load balancer

Source: Scalable system design patterns

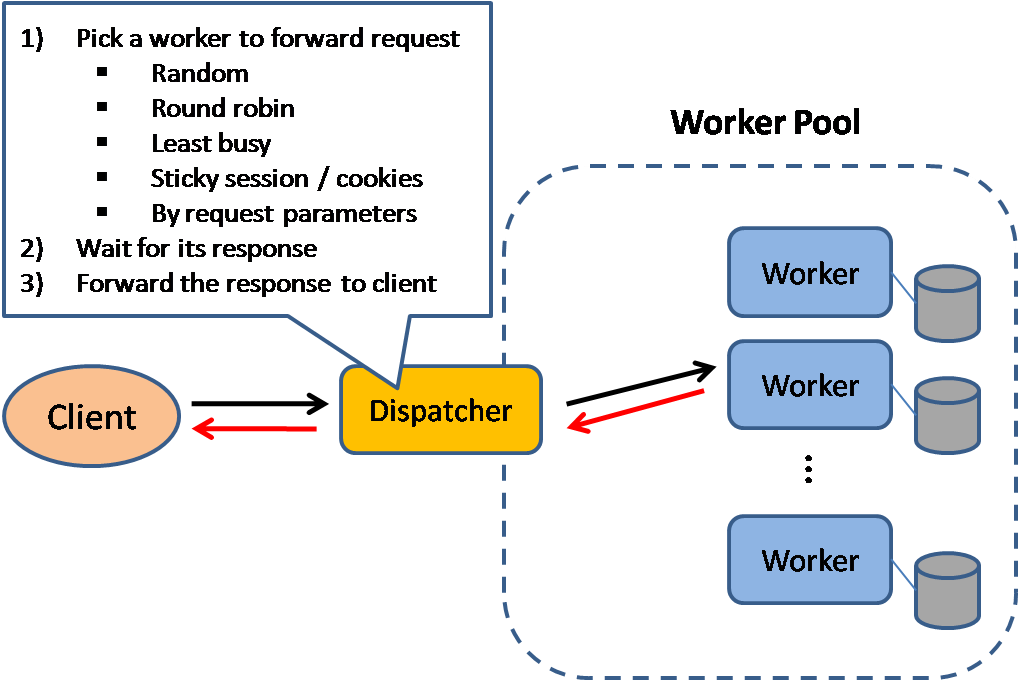

Load balancers distribute incoming client requests to computing resources such as application servers and databases. In each case, the load balancer returns the response from the computing resource to the appropriate client. Load balancers are effective at:

- Preventing requests from going to unhealthy servers

- Preventing overloading resources

- Helping to eliminate a single point of failure
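The first two points can be sketched as a filter applied before any routing decision. This is a minimal illustration, not how any particular product works: the `Backend` class, its `max_requests` limit, and the server names are assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    # Hypothetical record a load balancer might keep per server.
    name: str
    healthy: bool = True        # result of the most recent health check
    active_requests: int = 0    # requests currently in flight
    max_requests: int = 100     # assumed capacity limit


def eligible(backends):
    """Return the backends that are healthy and still below capacity."""
    return [b for b in backends
            if b.healthy and b.active_requests < b.max_requests]


pool = [
    Backend("app-1"),
    Backend("app-2", healthy=False),        # failed its health check
    Backend("app-3", active_requests=100),  # already at capacity
]
print([b.name for b in eligible(pool)])
```

Only the backends returned by `eligible` would be handed to the routing method.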

Load balancers can be implemented with hardware (expensive) or with software such as HAProxy.

Additional benefits include:

- SSL termination - Decrypt incoming requests and encrypt server responses so backend servers do not have to perform these potentially expensive operations

- Removes the need to install X.509 certificates on each server

- Session persistence - Issue cookies and route a specific client's requests to the same instance if the web apps do not keep track of sessions
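Cookie-based session persistence might look roughly like the sketch below. The `StickyBalancer` class and the `lb_backend` cookie name are hypothetical, and the fallback to round robin for new clients is an assumption made for the example.

```python
import itertools


class StickyBalancer:
    COOKIE = "lb_backend"  # hypothetical cookie name

    def __init__(self, backends):
        self.backends = list(backends)
        self._next = itertools.cycle(self.backends)

    def route(self, cookies):
        """Return (backend, cookies_to_set) for a request's cookie dict."""
        backend = cookies.get(self.COOKIE)
        # New client, or the cookie names a backend that no longer exists:
        # fall back to round robin and pin the client to the result.
        if backend not in self.backends:
            backend = next(self._next)
        return backend, {self.COOKIE: backend}


lb = StickyBalancer(["app-1", "app-2"])
backend, cookies = lb.route({})      # first visit: no cookie yet
repeat, _ = lb.route(cookies)        # later request carries the cookie
print(backend, repeat)
```

A real deployment would typically also sign or encrypt the cookie so clients cannot pick their own backend.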

To protect against failures, it's common to set up multiple load balancers, either in active-passive or active-active mode.

Load balancers can route traffic using a variety of methods, including:

- Random

- Least loaded

- Session/cookies

- Round robin or weighted round robin

- Layer 4

- Layer 7

- Consistent Hashing
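A few of these methods are simple enough to sketch in a handful of lines. The server names and weights below are made up, and the weighted variant uses the naive "repeat each server by its weight" approach rather than the smoother interleaving that production balancers often use.

```python
import itertools
import random


def round_robin(servers):
    """Cycle through the servers in order, forever."""
    return itertools.cycle(servers)


def weighted_round_robin(weights):
    """Naive weighting: a server with weight 3 appears 3 times per cycle."""
    expanded = [s for s, w in weights.items() for _ in range(w)]
    return itertools.cycle(expanded)


def least_loaded(active_requests):
    """Pick the server with the fewest in-flight requests."""
    return min(active_requests, key=active_requests.get)


def pick_random(servers, rng=random):
    """Pick any server with equal probability."""
    return rng.choice(servers)


servers = ["app-1", "app-2", "app-3"]
print(list(itertools.islice(round_robin(servers), 4)))
print(list(itertools.islice(
    weighted_round_robin({"app-1": 3, "app-2": 1, "app-3": 1}), 5)))
print(least_loaded({"app-1": 5, "app-2": 2, "app-3": 7}))
```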

Layer 4 load balancing¶

Layer 4 load balancers look at information at the transport layer to decide how to distribute requests. Generally, this involves the source and destination IP addresses and ports in the packet header, but not the contents of the packet. Layer 4 load balancers forward network packets to and from the upstream server, performing Network Address Translation (NAT).
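One way to picture a layer 4 decision is as a function of the connection's addresses and ports only. The sketch below hashes those fields to choose a backend; the backend addresses are invented, and hashing is an assumption for illustration (many layer 4 balancers instead keep a connection table and apply one of the methods listed earlier to new connections).

```python
import hashlib

# Hypothetical backend addresses.
BACKENDS = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]


def pick_backend(src_ip, src_port, dst_ip, dst_port, protocol="tcp"):
    """Choose a backend from header fields only; the payload is never read."""
    key = f"{protocol}|{src_ip}:{src_port}|{dst_ip}:{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    # A stable hash means every packet of one connection maps to the
    # same backend, as long as the backend list does not change.
    index = int.from_bytes(digest[:8], "big") % len(BACKENDS)
    return BACKENDS[index]


print(pick_backend("203.0.113.7", 51234, "198.51.100.1", 443))
```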

Layer 7 load balancing¶

Layer 7 load balancers look at the application layer to decide how to distribute requests. This can involve contents of the header, message, and cookies. Layer 7 load balancers terminate network traffic, read the message, make a load-balancing decision, then open a connection to the selected server. For example, a layer 7 load balancer can direct video traffic to servers that host videos while directing more sensitive user billing traffic to security-hardened servers.
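The video and billing example could be expressed as a small routing table keyed on the request path. The path prefixes and pool names here are hypothetical, and a real layer 7 balancer could just as well match on headers or cookies.

```python
from urllib.parse import urlsplit

# Hypothetical path prefixes and server pool names.
ROUTES = [
    ("/video/", "video-pool"),
    ("/billing/", "hardened-billing-pool"),
]
DEFAULT_POOL = "web-pool"


def choose_pool(url):
    """Pick a server pool by inspecting the request path."""
    path = urlsplit(url).path
    for prefix, pool in ROUTES:
        if path.startswith(prefix):
            return pool
    return DEFAULT_POOL


print(choose_pool("https://example.com/video/42"))
print(choose_pool("https://example.com/billing/invoices"))
print(choose_pool("https://example.com/about"))
```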

At the cost of flexibility, layer 4 load balancing requires less time and computing resources than layer 7, although the performance impact can be minimal on modern commodity hardware.

Consistent Hashing¶

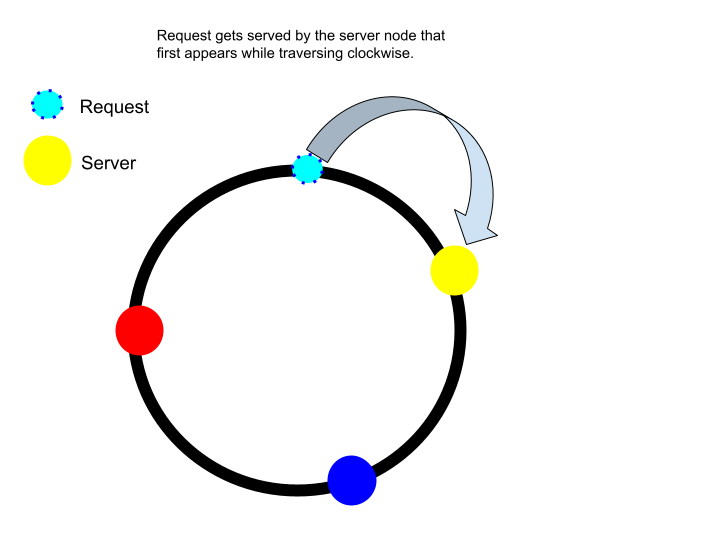

Consistent hashing is a technique used in load balancing to distribute requests evenly across multiple servers in a distributed system.

Here's how it works:

- Hash Ring: Imagine a virtual circle, called a hash ring. Each server and each incoming request is assigned a position on this circle by a hash function. Because the hash function is deterministic, the same input (server ID or request data) always maps to the same position on the ring.

- Request Distribution: When a request arrives, its data (often a unique identifier) is hashed to determine its position on the ring. The request is handled by the first server encountered when moving clockwise from that position, wrapping around to the start of the ring if necessary.

- Scalability and Consistency: The key benefit of consistent hashing is how it behaves as servers come and go. If a server is added or removed, only the requests that map to the segment of the ring next to the affected server are re-routed; most requests continue to go to the same servers as before. This minimizes re-caching of data and reduces the risk of hotspots where certain servers become overloaded.
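A minimal sketch of such a ring is shown below. The `HashRing` class is hypothetical, SHA-256 is an arbitrary choice of hash function, and placing each server at several positions (`replicas`, often called virtual nodes) is an addition not described above that is commonly used to even out the distribution.

```python
import bisect
import hashlib


def _position(key):
    """Map a string to a position on the ring (a 64-bit integer)."""
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class HashRing:
    def __init__(self, servers=(), replicas=100):
        self.replicas = replicas   # positions per server (assumption)
        self._positions = []       # sorted ring positions
        self._owner = {}           # position -> server
        for server in servers:
            self.add(server)

    def add(self, server):
        for i in range(self.replicas):
            pos = _position(f"{server}#{i}")
            if pos not in self._owner:
                bisect.insort(self._positions, pos)
                self._owner[pos] = server

    def remove(self, server):
        for i in range(self.replicas):
            pos = _position(f"{server}#{i}")
            if self._owner.get(pos) == server:
                del self._owner[pos]
                self._positions.remove(pos)

    def lookup(self, request_key):
        """Return the first server clockwise from the key's position."""
        if not self._positions:
            raise LookupError("ring is empty")
        i = bisect.bisect_right(self._positions, _position(request_key))
        # The modulo wraps around past the last position on the ring.
        return self._owner[self._positions[i % len(self._positions)]]


ring = HashRing(["app-1", "app-2", "app-3"])
keys = [f"user-{i}" for i in range(1000)]
before = {k: ring.lookup(k) for k in keys}
ring.add("app-4")
moved = [k for k in keys if ring.lookup(k) != before[k]]
print(f"{len(moved)} of {len(keys)} keys moved after adding a server")
```

Every key that moves ends up on the new server; keys owned by the other servers stay where they were, which is the property that limits re-caching.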

Horizontal scaling¶

Load balancers can also help with horizontal scaling, improving performance and availability. Scaling out using commodity machines is more cost efficient and results in higher availability than scaling up a single server on more expensive hardware, which is called vertical scaling. It is also easier to hire talent experienced with commodity hardware than with specialized enterprise systems.

Disadvantage(s): horizontal scaling¶

- Scaling horizontally introduces complexity and involves cloning servers

- Servers should be stateless: they should not contain any user-related data like sessions or profile pictures

- Sessions can be stored in a centralized data store such as a database (SQL, NoSQL) or a shared cache (Redis, Memcached); note that Memcached keeps data only in memory, so sessions stored there are lost on restart

- Downstream servers such as caches and databases need to handle more simultaneous connections as upstream servers scale out
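The stateless-server point can be illustrated with a shared session store that every application server consults. In this sketch the `SessionStore` class is an in-memory stand-in for a real shared store such as Redis or a database, and all class and method names are hypothetical.

```python
import uuid


class SessionStore:
    """Stand-in for a centralized store; a plain dict for illustration."""

    def __init__(self):
        self._data = {}

    def create(self, user):
        session_id = uuid.uuid4().hex
        self._data[session_id] = {"user": user}
        return session_id

    def get(self, session_id):
        return self._data.get(session_id)


class AppServer:
    """Keeps no session state of its own, so any clone can serve any user."""

    def __init__(self, name, store):
        self.name = name
        self.store = store  # shared by all servers, not per-server

    def handle(self, session_id):
        session = self.store.get(session_id)
        if session is None:
            return f"{self.name}: please log in"
        return f"{self.name}: hello {session['user']}"


store = SessionStore()
servers = [AppServer("app-1", store), AppServer("app-2", store)]
sid = store.create("alice")
for server in servers:
    print(server.handle(sid))
```

Because both servers read the same store, the load balancer is free to send each request to either one.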

Disadvantage(s): load balancer¶

- The load balancer can become a performance bottleneck if it does not have enough resources or if it is not configured properly.

- Introducing a load balancer to help eliminate a single point of failure results in increased complexity.

- A single load balancer is a single point of failure; configuring multiple load balancers further increases complexity.
